In the fast-evolving landscape of artificial intelligence, one architecture has captured the imagination of researchers, developers, and enthusiasts alike: the Generative Pre-trained Transformer (GPT). Developed by OpenAI, GPT has redefined what is possible in natural language processing and understanding. In this post, we'll unravel the GPT architecture, exploring its components, training methodology, and the impact it has had on various applications.

Understanding GPT Architecture

1. Transformer Architecture

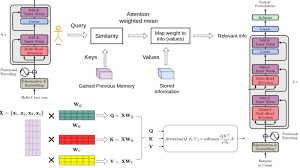

At the heart of GPT lies the Transformer architecture, introduced by Vaswani et al. in the seminal paper "Attention is All You Need". This architecture relies on self-attention, which lets the model weigh how relevant every other token in the sequence is when it processes a given token. The original Transformer paired an encoder with a decoder for sequence-to-sequence tasks such as translation; GPT keeps only the decoder stack and masks attention so that each position can attend only to earlier positions, which is what lets it generate text one token at a time.
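To make the idea of masked self-attention concrete, here is a minimal sketch in plain Python. It assumes the query, key, and value vectors are passed in directly; a real model derives them from learned projections of the token embeddings and runs many attention heads in parallel. The function names are illustrative and not taken from any library.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1 (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i sees only positions <= i.

    Each argument is a list of equal-length vectors, one per token.
    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    d_k = len(keys[0])
    seq_len = len(queries)
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the query against the current and earlier keys only (the causal mask).
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d_k)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the visible value vectors.
        out = [
            sum(w * values[j][c] for j, w in enumerate(weights))
            for c in range(len(values[0]))
        ]
        outputs.append(out)
        # Future positions get weight 0 so every row has length seq_len.
        all_weights.append(weights + [0.0] * (seq_len - i - 1))
    return outputs, all_weights

# Toy input: three tokens with 2-dimensional vectors (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

The first token can only attend to itself, so its weight row is entirely concentrated on position 0, while the last token spreads its attention over all three positions.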

2. Pre-training and Fine-tuning

GPT's pre-training phase exposes the model to vast amounts of unlabeled text with a simple objective: predict the next token given the tokens before it. Doing this at scale forces the model to pick up grammar, facts, and patterns of language. The pre-trained model can then be fine-tuned on a smaller task-specific dataset, making it adaptable to a wide array of applications, from text completion to question-answering systems.
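The pre-training objective can be sketched as an average cross-entropy loss over next-token predictions. The example below is a simplified illustration, not OpenAI's training code: it assumes the model has already produced one list of logits (unnormalized scores over the vocabulary) per position, and the function name and toy numbers are hypothetical.

```python
import math

def next_token_loss(logits_per_position, token_ids):
    """Average cross-entropy of predicting token t+1 from the logits at position t."""
    total = 0.0
    count = 0
    for t in range(len(token_ids) - 1):
        logits = logits_per_position[t]
        target = token_ids[t + 1]
        # log-sum-exp gives the log of the softmax normalizer in a stable way.
        peak = max(logits)
        log_z = peak + math.log(sum(math.exp(l - peak) for l in logits))
        # Negative log-probability assigned to the token that actually came next.
        total += log_z - logits[target]
        count += 1
    return total / count

# Toy setup: vocabulary of 4 tokens, sequence of 3 token ids.
token_ids = [0, 1, 2]
uninformed = [[0.0, 0.0, 0.0, 0.0]] * 3   # model has no preference
confident = [
    [0.0, 5.0, 0.0, 0.0],                 # favors token 1 after position 0
    [0.0, 0.0, 5.0, 0.0],                 # favors token 2 after position 1
    [0.0, 0.0, 0.0, 0.0],                 # last position has no target
]
print(next_token_loss(uninformed, token_ids))
print(next_token_loss(confident, token_ids))
```

A model with no preference scores a loss of log(4), the cost of guessing uniformly among four tokens, while the model that favors the correct next tokens scores much lower. Fine-tuning typically reuses the same kind of loss on task-specific text.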

3. Layered Architecture

GPT is composed of a stack of identical layers, each containing a masked self-attention mechanism and a feedforward neural network. The depth of the stack has grown with each generation: GPT-1 used 12 layers, the largest GPT-2 used 48, and the largest GPT-3 uses 96. This depth contributes to the model's ability to track context and generate coherent, contextually relevant text.
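The way these layers stack can be sketched as follows. This is a structural illustration only: the attention and feedforward sublayers are passed in as plain functions (hypothetical stand-ins for learned components), layer normalization is shown without its learned scale and bias, and the normalize-then-add ordering follows the pre-norm arrangement used from GPT-2 onward.

```python
import math

def layer_norm(vec, eps=1e-5):
    """Normalize one vector to zero mean and unit variance."""
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]

def add(xs, ys):
    """Element-wise sum of two sequences of vectors (the residual connection)."""
    return [[a + b for a, b in zip(x, y)] for x, y in zip(xs, ys)]

def decoder_block(xs, attention, feed_forward):
    """One layer: self-attention, then a per-token feedforward net, each with a residual."""
    # attention mixes information across positions, so it receives the whole sequence.
    xs = add(xs, attention([layer_norm(x) for x in xs]))
    # feed_forward is applied to every position independently.
    xs = add(xs, [feed_forward(layer_norm(x)) for x in xs])
    return xs

def run_stack(xs, blocks):
    """Apply the blocks in order; each block is an (attention, feed_forward) pair."""
    for attention, feed_forward in blocks:
        xs = decoder_block(xs, attention, feed_forward)
    return xs

# Toy sublayers so the sketch runs end to end.
def mean_attention(seq):
    # Stand-in for attention: every position receives the average of all vectors.
    dim = len(seq[0])
    avg = [sum(v[c] for v in seq) / len(seq) for c in range(dim)]
    return [avg[:] for _ in seq]

def tiny_feed_forward(vec):
    # Stand-in for the feedforward net: a ReLU scaled by 0.5.
    return [0.5 * max(0.0, v) for v in vec]

hidden = [[1.0, 2.0, 3.0], [0.0, -1.0, 1.0]]
print(run_stack(hidden, [(mean_attention, tiny_feed_forward)] * 3))
```

Because every sublayer adds its output to its input rather than replacing it, each layer refines the representation it receives, which is part of what makes very deep stacks trainable.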

GPT in Action

1. GPT-3: The Flagship Model

GPT-3, the third iteration of the architecture, is a behemoth with 175 billion parameters. This scale enables it to perform an array of tasks, including text completion, translation, and code generation, often from just a few examples given in the prompt rather than through task-specific fine-tuning. OpenAI's playground provides a glimpse into GPT-3's capabilities, allowing users to interact with the model and witness its versatility firsthand.
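A back-of-the-envelope calculation shows where a figure like 175 billion comes from. The sketch below uses the hyperparameters reported for the largest model in the GPT-3 paper (96 layers, model width 12,288, a vocabulary of 50,257 tokens, and a 2,048-token context) and a common approximation that counts only the large weight matrices; biases and layer-normalization parameters are ignored, so the result is an estimate rather than an exact count. The function name is illustrative.

```python
def approx_decoder_params(n_layers, d_model, vocab_size, n_positions):
    """Rough parameter count for a GPT-style decoder (weight matrices only)."""
    # Attention: query, key, value, and output projections, each d_model x d_model.
    attention = 4 * d_model * d_model
    # Feedforward: two matrices with a hidden size of 4 * d_model.
    feed_forward = 8 * d_model * d_model
    per_layer = attention + feed_forward
    # Token embeddings plus learned position embeddings.
    embeddings = (vocab_size + n_positions) * d_model
    return n_layers * per_layer + embeddings

total = approx_decoder_params(
    n_layers=96, d_model=12288, vocab_size=50257, n_positions=2048
)
print(f"{total / 1e9:.1f} billion parameters")
```

The estimate lands at roughly 174.6 billion, close to the headline figure. Nearly all of it comes from the 12 × d_model² weights in each of the 96 layers; the embeddings contribute well under one percent.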

2. GPT Applications

GPT has found applications across diverse domains. It powers chatbots and virtual assistants and facilitates the generation of creative content. OpenAI has also showcased GPT-style models that write Python code and even generate music.

Challenges and Ethical Considerations

The power of GPT comes with its own set of challenges. Issues like bias in language generation, potential misuse, and the environmental impact of training such large models have sparked discussions within the AI community. OpenAI is actively addressing these concerns and iterating on models and policies to ensure responsible use.

Resources for Further Exploration

OpenAI's GPT Documentation: OpenAI provides comprehensive documentation on GPT, offering insights into its architecture, usage, and guidelines.

Attention is All You Need: Read the foundational paper introducing the Transformer architecture.

GPT-3 Research Paper: Delve into the details of GPT-3's architecture and capabilities in "Language Models are Few-Shot Learners".

Conclusion

GPT architecture stands as a testament to the rapid advancements in natural language processing. Its ability to understand context, generate coherent text, and adapt to various tasks has opened new frontiers in AI applications. As we navigate the evolving landscape of AI, GPT continues to be at the forefront, shaping the way we interact with and leverage the power of language models.